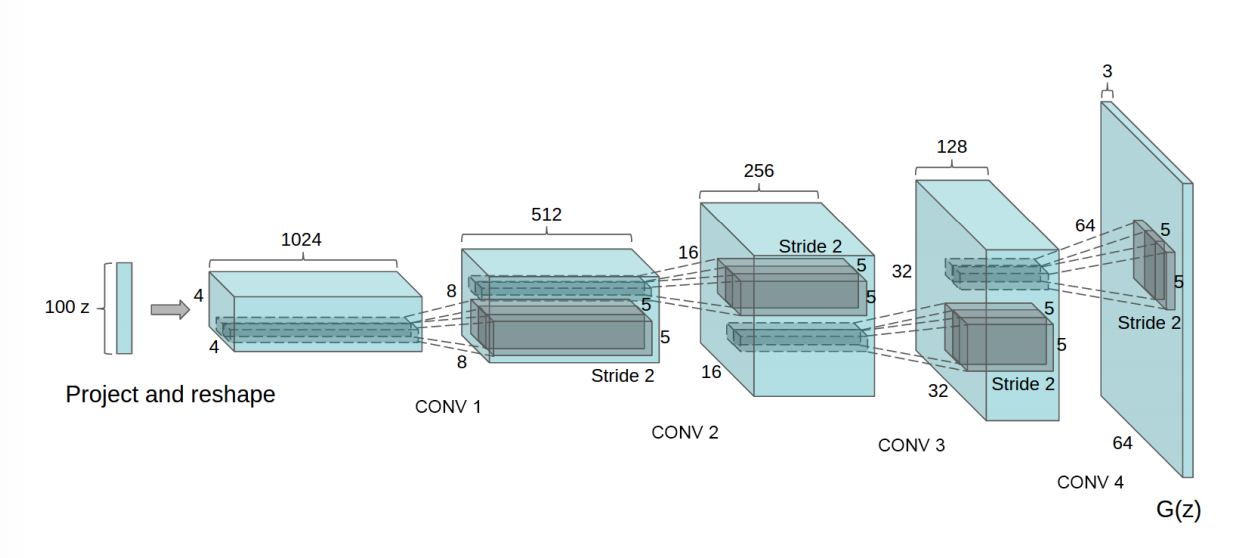

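import tensorflow as tf


class Generator_model(tf.keras.Model):
    """Generator: maps a noise vector to a 28x28x1 image with values in [-1, 1]."""

    def __init__(self):
        super().__init__()
        # Project the noise vector to 7*7*256 units, later reshaped to 7x7x256.
        self.dense = tf.keras.layers.Dense(7 * 7 * 256, use_bias=False)
        self.bn1 = tf.keras.layers.BatchNormalization()
        self.leakyrelu1 = tf.keras.layers.LeakyReLU()
        self.reshape = tf.keras.layers.Reshape((7, 7, 256))
        # Stride 1 keeps the spatial size: 7x7x256 -> 7x7x128.
        self.convT1 = tf.keras.layers.Conv2DTranspose(
            128, (5, 5), strides=(1, 1), padding='same', use_bias=False)
        self.bn2 = tf.keras.layers.BatchNormalization()
        self.leakyrelu2 = tf.keras.layers.LeakyReLU()
        # Stride 2 doubles the spatial size: 7x7x128 -> 14x14x64.
        self.convT2 = tf.keras.layers.Conv2DTranspose(
            64, (5, 5), strides=(2, 2), padding='same', use_bias=False)
        self.bn3 = tf.keras.layers.BatchNormalization()
        self.leakyrelu3 = tf.keras.layers.LeakyReLU()
        # Output layer: 14x14x64 -> 28x28x1, with tanh bounding values to [-1, 1].
        self.convT3 = tf.keras.layers.Conv2DTranspose(
            1, (5, 5), strides=(2, 2), padding='same', use_bias=False,
            activation='tanh')

    def call(self, inputs, training=True):
        # Pass `training` by keyword so batch normalization uses batch
        # statistics during training and moving averages at inference.
        x = self.dense(inputs)
        x = self.bn1(x, training=training)
        x = self.leakyrelu1(x)
        x = self.reshape(x)
        x = self.convT1(x)
        x = self.bn2(x, training=training)
        x = self.leakyrelu2(x)
        x = self.convT2(x)
        x = self.bn3(x, training=training)
        x = self.leakyrelu3(x)
        x = self.convT3(x)
        return x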
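To make the shape flow through the generator above easier to follow, here is a minimal sketch that traces the per-sample output shape of each shape-changing layer in plain Python, without TensorFlow. It relies on the fact that a Keras `Conv2DTranspose` layer with `padding='same'` produces a spatial size equal to the input size times the stride. The helper names `conv_transpose_same` and `trace_generator_shapes` are hypothetical and exist only for this illustration.

```python
def conv_transpose_same(size, stride):
    """Spatial output size of a transposed convolution with padding='same'."""
    return size * stride


def trace_generator_shapes():
    """Return (layer name, output shape) pairs for one sample (batch axis omitted)."""
    shapes = [("dense", (7 * 7 * 256,)), ("reshape", (7, 7, 256))]
    size = 7
    # (layer name, filters, stride) copied from the generator's constructor.
    layers = [("convT1", 128, 1), ("convT2", 64, 2), ("convT3", 1, 2)]
    for name, filters, stride in layers:
        size = conv_transpose_same(size, stride)
        shapes.append((name, (size, size, filters)))
    return shapes


for name, shape in trace_generator_shapes():
    print(f"{name:8s} -> {shape}")
```

The batch normalization and LeakyReLU layers are left out of the trace because they do not change the tensor shape. The final entry shows that the generator emits a single-channel 28x28 image per noise vector.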